Overview

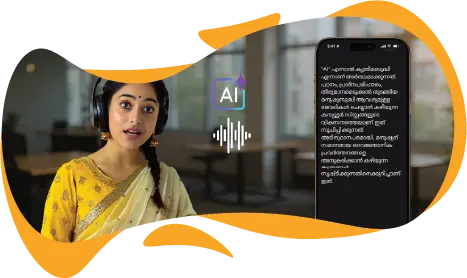

We developed a high-accuracy Malayalam speech-to-text (STT) system by fine-tuning OpenAI’s Whisper. Whisper is a multilingual automatic speech recognition (ASR) model, but it was trained on very little Malayalam audio, which limited its accuracy for the language. By expanding the training data, fine-tuning the model, and addressing Malayalam-specific linguistic challenges, we built a specialized system that preserves the richness and precision of the language. The result is more accurate, more inclusive, and easier to scale, making speech technology accessible to millions of Malayalam speakers. This case study outlines the challenge of limited language support, our approach to customizing Whisper, and the business impact of deploying a reliable speech recognition system for a low-resource language.

Key Highlights:

- Addressed the gaps in existing speech-to-text tools for Malayalam.

- Enhanced Whisper with 100+ hours of high-quality, diverse Malayalam audio data.

- Delivered faster and more accurate transcription, enabling real-time use cases.

- Ensured scalable deployment via Hugging Face for easy integration.

- Demonstrated a replicable model for other low-resource languages, expanding market reach.

Case

The project aimed to create a robust Malayalam speech-to-text model capable of handling diverse accents, dialects, and real-world audio conditions. OpenAI’s Whisper was chosen as the base because of its strong multilingual capabilities and open-source availability. However, due to Whisper’s minimal Malayalam training data (~30 minutes), substantial enhancements were necessary to achieve production-grade performance.

Challenges

Developing a reliable Malayalam STT system posed several hurdles:

- Poor Quality of Training Data: Existing Malayalam datasets suffered from background noise, poor clarity, and inconsistent transcriptions.

- Limited Public Datasets: Compared to English, Malayalam lacked large, open-source speech corpora.

- Complex Phonetics: Unique phonetic features like retroflex consonants and vowel length distinctions required specialized modeling.

- Resource Constraints: Training large ASR models demanded high GPU/TPU resources and time.

- No Standardized Benchmarks: The absence of evaluation benchmarks for Malayalam ASR made objective performance comparisons difficult.

Solution

To overcome these challenges, the following approach was implemented:

- Dataset Expansion: Compiled a 100-hour custom dataset of Malayalam speech samples, ensuring noise-free recordings and accurate transcriptions.

- Dialect and Accent Coverage: Included diverse regional accents and colloquial expressions for robust generalization.

- Model Selection: Chose Whisper Large V3 Turbo, which reduces the number of decoder layers from 32 to 4 for faster inference while largely retaining accuracy.

- Training Setup: Fine-tuned the model on high-performance hardware (a 48 GB GPU and 64 GB of RAM) to balance training speed and accuracy.

- Deployment: Hosted the trained model on Hugging Face for seamless integration into real-world applications.

- Evaluation: Measured performance using Word Error Rate (WER) and Character Error Rate (CER), achieving lower WER and CER than the baseline Whisper models.
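
The two evaluation metrics named above can be illustrated with a minimal sketch. This is not the project's evaluation code: the function names (`edit_distance`, `normalize`, `wer`, `cer`) are hypothetical, and the choices to apply Unicode NFC normalization and to count spaces as characters in CER are assumptions made for illustration. Both metrics divide the Levenshtein edit distance between reference and hypothesis by the length of the reference, at word level for WER and at character level for CER.

```python
import unicodedata


def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (words or characters)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference item
                curr[j - 1] + 1,     # insertion of a hypothesis item
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1]


def normalize(text):
    # Assumption: NFC normalization plus whitespace collapsing, so that
    # canonically equivalent Malayalam strings compare as equal.
    return " ".join(unicodedata.normalize("NFC", text).split())


def wer(reference, hypothesis):
    """Word Error Rate: word-level edits divided by reference word count."""
    ref_words = normalize(reference).split()
    hyp_words = normalize(hypothesis).split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hyp_words) / len(ref_words)


def cer(reference, hypothesis):
    """Character Error Rate: character-level edits divided by reference length.

    Assumption: characters are Unicode code points and spaces are counted.
    """
    ref_chars = list(normalize(reference))
    hyp_chars = list(normalize(hypothesis))
    if not ref_chars:
        raise ValueError("reference must contain at least one character")
    return edit_distance(ref_chars, hyp_chars) / len(ref_chars)


if __name__ == "__main__":
    reference = "ഞാൻ വീട്ടിൽ പോയി"
    hypothesis = "ഞാൻ വീട്ടിൽ പോയ"
    print(f"WER: {wer(reference, hypothesis):.3f}")
    print(f"CER: {cer(reference, hypothesis):.3f}")
```

Because a single wrong vowel sign makes a whole Malayalam word count as an error, WER tends to be much higher than CER for the same transcript, which is one reason to report both.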

Impact

The optimized Malayalam Whisper model delivered:

- Higher Accuracy: Significant improvements in transcription quality with reduced errors.

- Improved User Experience: Fewer transcription errors made the output more dependable for users, boosting trust.

- Future-Ready Approach: Provided a framework adaptable to other low-resource languages, allowing businesses to expand offerings across diverse markets.

- Inclusivity & Accessibility: Opened new opportunities for reaching Malayalam-speaking users with better engagement.

- Real-Time Capability: Faster inference made it suitable for live captioning and real-time speech recognition.

- Scalability: The Hugging Face deployment enabled easy adoption across applications.

- Language Preservation: Captured the phonetic richness of Malayalam, making technology more inclusive for native speakers.

- Broader Applicability: The approach can be adapted to other low-resource languages, demonstrating a scalable method for improving ASR systems beyond English and widely supported languages.
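
As a rough illustration of the Hugging Face integration and real-time claims above, the sketch below loads a fine-tuned Whisper checkpoint through the `transformers` ASR pipeline and reports a real-time factor (processing time divided by audio duration; values below 1 mean faster than real time). The model ID in the usage note is a placeholder, not the project's actual repository, and the helper names (`real_time_factor`, `build_transcriber`, `timed_transcribe`) are hypothetical. It assumes the `transformers` library and an audio backend such as ffmpeg are installed.

```python
import time


def real_time_factor(processing_seconds, audio_seconds):
    """Processing time divided by audio duration; below 1.0 is faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


def build_transcriber(model_id):
    """Create an ASR pipeline for a Whisper checkpoint hosted on Hugging Face."""
    # Imported lazily so the timing helpers work without transformers installed.
    from transformers import pipeline

    return pipeline(
        "automatic-speech-recognition",
        model=model_id,
        chunk_length_s=30,  # split long audio into 30-second chunks
    )


def timed_transcribe(transcriber, audio_path, audio_seconds):
    """Transcribe one file and return (text, real-time factor)."""
    start = time.perf_counter()
    result = transcriber(
        audio_path,
        # Force Malayalam transcription instead of relying on language detection.
        generate_kwargs={"language": "malayalam", "task": "transcribe"},
    )
    elapsed = time.perf_counter() - start
    return result["text"], real_time_factor(elapsed, audio_seconds)
```

A call might look like `timed_transcribe(build_transcriber("your-org/whisper-malayalam"), "sample.wav", 12.5)`, where both the model ID and the file name are placeholders. Measured real-time factors depend heavily on hardware, batch size, and audio length, so they should be reported alongside the test setup.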